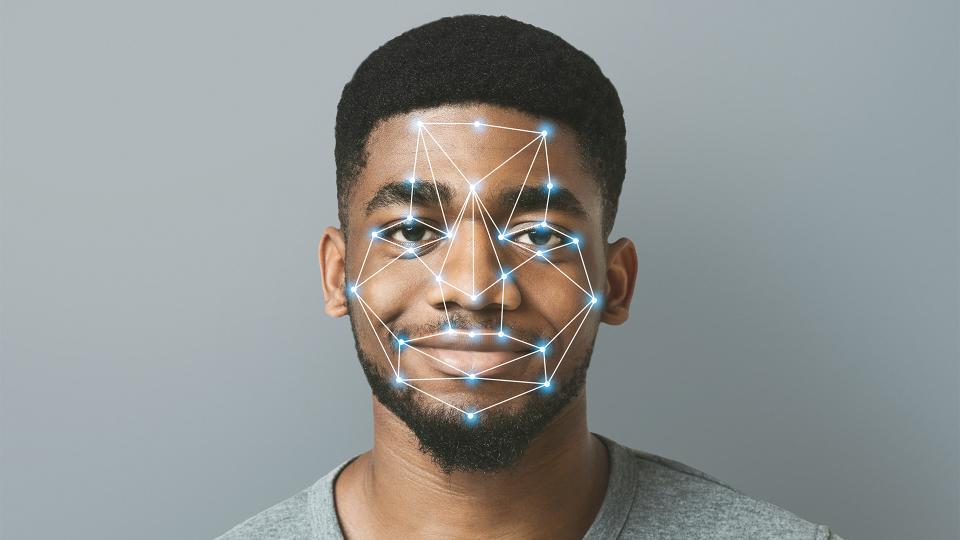

Facial recognition technology scans, analyzes, and recognizes faces. It can come with complications, especially if you’re Black. That’s what Lauren Rhue, assistant professor of information systems at the University of Maryland’s Robert H. Smith School of Business, has found. Her research shows that facial recognition software used to detect emotions displays racial disparities.

Using a set of photos of NBA players, Rhue compared the emotion analysis produced by two facial recognition services, Face++ and Microsoft AI. She says, “both services interpret black players as having more negative emotions than white players. Face++ consistently interprets black players as angrier than white players, controlling for their degree of smiling. Microsoft registers contempt instead of anger, and it interprets Black players as more contemptuous when their facial expressions are ambiguous. As the players’ smiles widen, the disparity disappears.”

Other research of this type has produced similar results, and in June Microsoft decided to stop using the features of its facial recognition software that detect emotion. An NBC News article, “Microsoft is removing emotion recognition features from its facial recognition tech,” cites Rhue’s research.

As more companies and government agencies use facial recognition technology, criticism of how the tech analyzes people of color is also on the rise. CNN reports several Black men have been wrongfully arrested due to the use of facial recognition software.

The software also has implications for many facets of everyday life, such as navigating the world of work.

“There’s been some interesting work that looks at emotional labor, saying that essentially African Americans often have to have more exaggerated positive emotions in order to be perceived at the same level of positive as others,” says Rhue. “For example, a sales associate might have to be just over the top friendly and (have) huge smiles in order to be recognized as being pleasant.”

Rhue, whose body of research explores the economic and social implications of technology, feels her findings on emotion-recognition tech are a reminder that disparities in artificial intelligence often mirror the disparities in the offline world.

Read more in the working paper “Racial Influence on Automated Perceptions of Emotions.”

Media Contact

Greg Muraski

Media Relations Manager

301-405-5283

301-892-0973 Mobile

gmuraski@umd.edu
